* equal contribution

Published in proceedings of ICML 2020

Links 👉 Github Code, ICML Paper, Cite BibTex

Media 📰

Twitter, BAIR blog

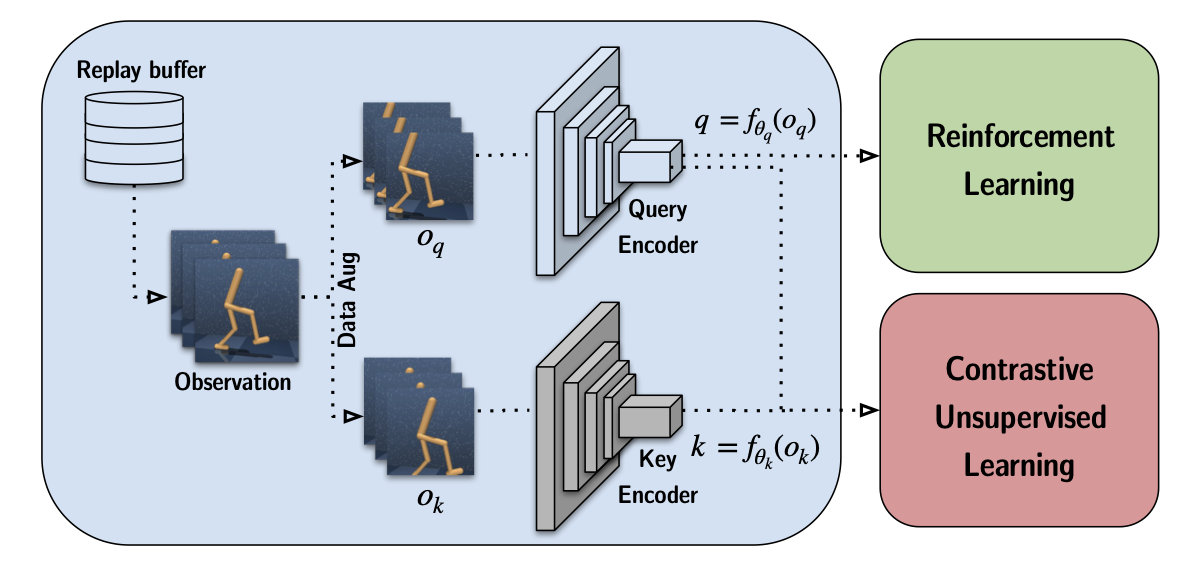

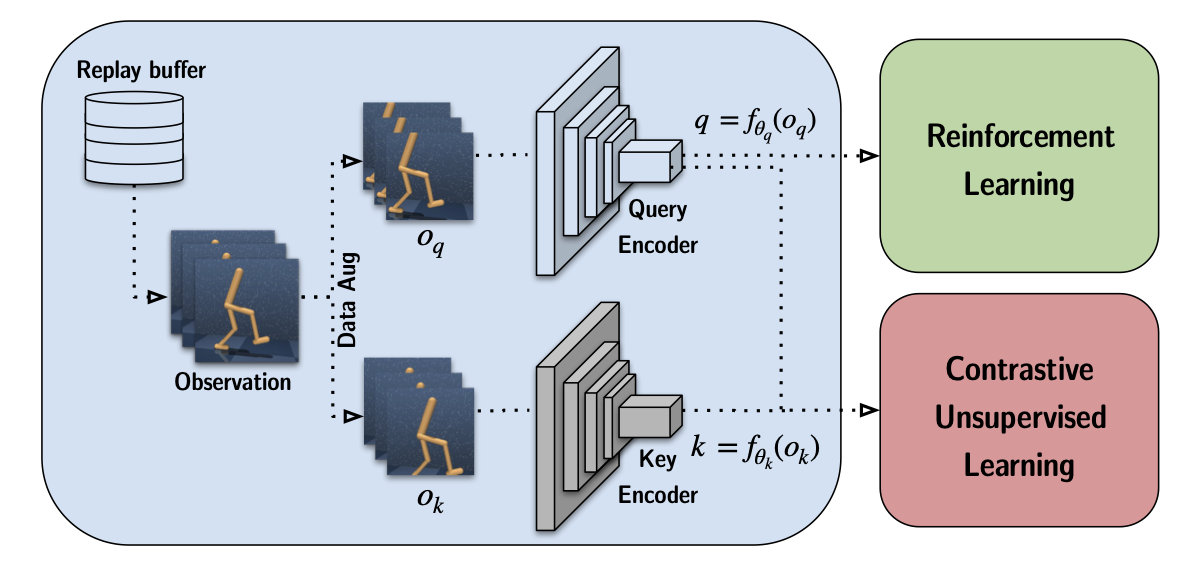

We present CURL: Contrastive Unsupervised Representations for Reinforcement Learning. CURL extracts high-level features from raw pixels using contrastive learning and performs off-policy control on top of the extracted features. CURL outperforms prior pixel-based methods, both model-based and model-free, on complex tasks in the DeepMind Control Suite and Atari Games, with 1.9x and 1.2x performance gains at the 100K environment-step and 100K interaction-step benchmarks, respectively. On the DeepMind Control Suite, CURL is the first image-based algorithm to nearly match the sample efficiency of methods that use state-based features.

CURL learns contrastive representations jointly with the RL objective. The representation learning is done as an auxiliary task that can be coupled to any model-free RL algorithm. In our paper, we combine contrastive representation learning with two state-of-the-art algorithms: (i) Soft Actor-Critic (SAC) for continuous control and (ii) Rainbow DQN for discrete control. Contrastive representations are learned by specifying an anchor observation, then maximizing agreement between the anchor and its positive pair and minimizing agreement between the anchor and its negative pairs through Noise Contrastive Estimation. A high-level diagram of CURL is shown below.
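To make the anchor / positive / negative objective concrete, here is a minimal NumPy sketch of an InfoNCE-style contrastive loss. It is an illustration under stated assumptions, not the released implementation: the function name `info_nce_loss`, the bilinear similarity matrix `W`, and the use of the other rows in the batch as negatives are all choices made for this example.

```python
import numpy as np

def info_nce_loss(queries, keys, W):
    """InfoNCE-style loss with a bilinear similarity q^T W k (illustrative).

    queries: (B, D) encodings of the anchor observations.
    keys:    (B, D) encodings of the candidates; row i of `keys` is assumed
             to be the positive for row i of `queries`, and every other row
             is treated as a negative for that anchor.
    W:       (D, D) similarity matrix (an assumption of this sketch).
    """
    logits = queries @ W @ keys.T                          # (B, B) similarities
    logits = logits - logits.max(axis=1, keepdims=True)    # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy where the "correct class" for anchor i is key i.
    return -np.mean(np.diag(log_probs))

# Toy check: four orthogonal anchors with identity similarity.
anchors = 5.0 * np.eye(4)
W = np.eye(4)
loss_matched = info_nce_loss(anchors, anchors, W)                      # positives aligned
loss_mismatched = info_nce_loss(anchors, np.roll(anchors, 1, axis=0), W)  # positives misaligned
print(loss_matched, loss_mismatched)
```

The loss is small when each anchor agrees most with its own positive and large when it agrees more with a negative. In a joint setup like the one described above, a term of this kind would be added as an auxiliary loss alongside the RL objective.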

Short video demonstrating efficient learning with CURL on DeepMind control.